Developer platform GitLab today announced a new AI-driven security feature that uses a large language model to explain potential vulnerabilities to developers, with plans to expand this to automatically resolve these vulnerabilities using AI in the future.

Earlier this month, the company announced a new experimental tool that explains code to a developer, similar to the security feature announced today, and a new experimental feature that automatically summarizes issue comments. In this context, it’s also worth noting that GitLab already launched a code completion tool, which is now available to GitLab Ultimate and Premium users, and its ML-based suggested reviewers feature last year.

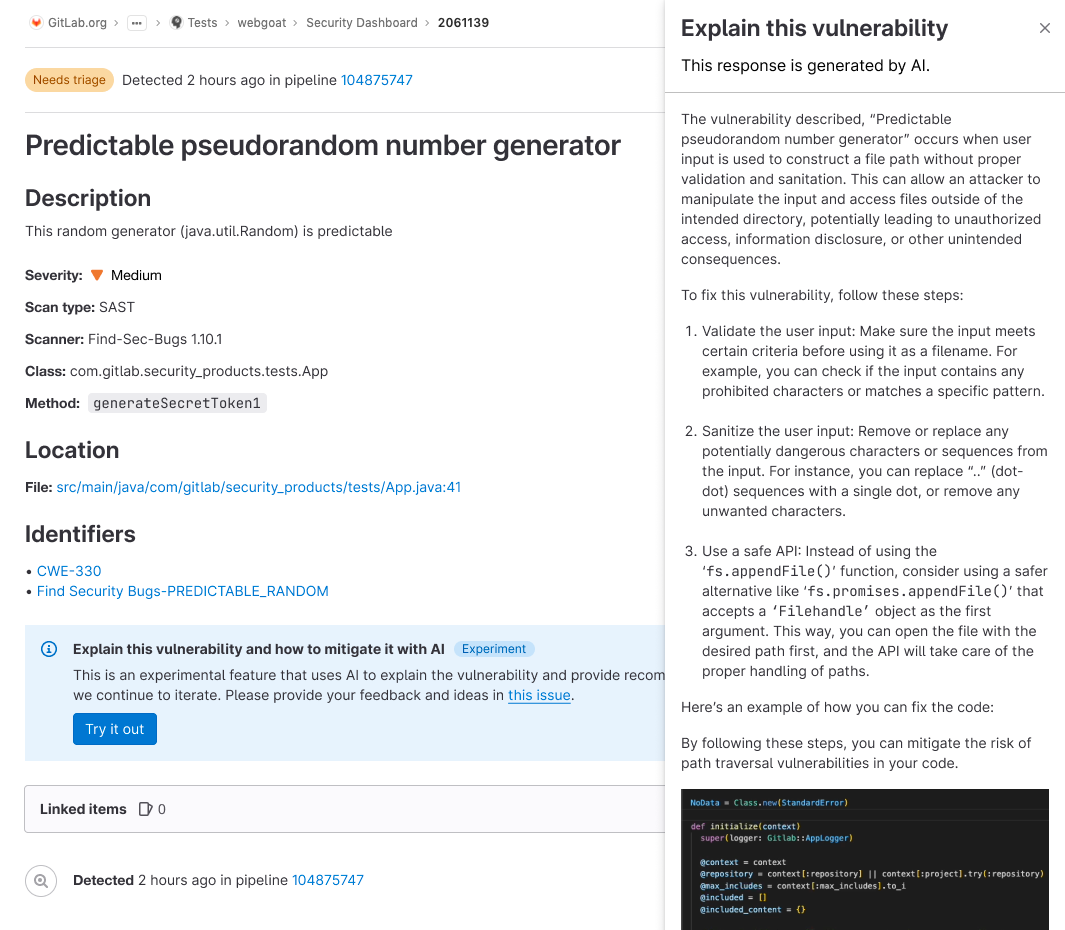

The new “explain this vulnerability” feature will try to help teams find the best way to fix a vulnerability within the context of their code base. It’s this context that makes the difference here, as the tool is able to combine the basic info about the vulnerability with specific insights from the user’s code. This should make it easier and faster to remediate these issues.

The company calls its overall philosophy behind adding AI features “velocity with guardrails,” that is, the combination of AI code and test generation backed by the company’s full-stack DevSecOps platform to ensure that whatever the AI generates can be deployed safely.

GitLab also stressed that all of its AI features are built with privacy in mind. “If we are touching your intellectual property, which is code, we are only going to be sending that to a model that is GitLab’s or is within the GitLab cloud architecture,” GitLab CPO David DeSanto told me. “The reason why that’s important to us, and this goes back to enterprise DevSecOps, is that our customers are heavily regulated. Our customers are usually very security and compliance conscious, and we knew we could not build a code suggestions solution that required us sending it to a third-party AI.” He also noted that GitLab won’t use its customers’ private data to train its models.

DeSanto stressed that GitLab’s overall goal for its AI initiative is to 10x efficiency — and not just the efficiency of the individual developer but the overall development lifecycle. As he rightly noted, even if you could 100x a developer’s productivity, inefficiencies further downstream in reviewing that code and putting it into production could easily negate that.

“If development is 20% of the life cycle, even if we make that 50% more effective, you’re not really going to feel it,” DeSanto said. “Now, if we make the security teams, the operations teams, the compliance teams also more efficient, then as an organization, you’re going to see it.”
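DeSanto’s arithmetic can be checked with a rough, Amdahl’s-law-style estimate. The sketch below is not GitLab’s own calculation. It assumes that the stages of the life cycle run one after another, that “20%” refers to the share of total time spent on development, and that “50% more effective” means that stage runs 1.5 times as fast. The function name `overall_speedup` and its parameters are made up for illustration.

```python
def overall_speedup(stage_share: float, stage_speedup: float) -> float:
    """Estimate the end-to-end speedup when only one stage gets faster.

    stage_share   -- fraction of total life-cycle time spent in the stage (0..1)
    stage_speedup -- how many times faster that stage becomes (> 0)
    """
    if not 0.0 <= stage_share <= 1.0:
        raise ValueError("stage_share must be between 0 and 1")
    if stage_speedup <= 0:
        raise ValueError("stage_speedup must be positive")
    # Time for the untouched stages stays the same; the improved stage shrinks.
    new_total_time = (1.0 - stage_share) + stage_share / stage_speedup
    return 1.0 / new_total_time


# Development is 20% of the life cycle and becomes 1.5x as fast (assumed reading
# of "50% more effective").
print(round(overall_speedup(0.20, 1.5), 3))    # 1.071

# Even a 100x faster development stage caps out near 1.25x overall.
print(round(overall_speedup(0.20, 100.0), 3))  # 1.247
```

Under these assumptions, speeding up development alone yields roughly a 7% end-to-end gain, and even a 100x improvement stays below 1.25x, which is consistent with the point that the other teams in the life cycle have to get faster too.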

The “explain this code” feature, for example, has turned out to be quite useful not just for developers but also QA and security teams, which now get a better understanding of what they should test. That, surely, was also why GitLab expanded it to explain vulnerabilities as well. In the long run, the idea here is to build features to help these teams automatically generate unit tests and security reviews — which would then be integrated into the overall GitLab platform.

According to GitLab’s recent DevSecOps report, 65% of developers are already using AI and ML in their testing efforts or plan to do so within the next three years. Already, 36% of teams use an AI/ML tool to check their code before code reviewers even see it.

“Given the resource constraints DevSecOps teams face, automation and artificial intelligence become a strategic resource,” GitLab’s Dave Steer writes in today’s announcement. “Our DevSecOps Platform helps teams fill critical gaps while automatically enforcing policies, applying compliance frameworks, performing security tests using GitLab’s automation capabilities, and providing AI assisted recommendations – which frees up resources.”

GitLab’s new security feature uses AI to explain vulnerabilities to developers by Frederic Lardinois originally published on TechCrunch
